- The 2021 Unibet Deepstack Open online poker tournament will be held from 3 to 8 February.

- Tournament schedule (tournament, day, time, buy-in):
  - Deepstack NLH (Re-Entry), $20,000 guaranteed: Wed, February 19, 11am, $100 + $20
  - Deeper Stack NLH (Re-Entry), $20,000 guaranteed: Thu, February 20

DeepStack is an AI server that you can easily install and run completely offline or in the cloud. It provides Face Recognition, Object Detection, Scene Recognition and Custom Recognition APIs for building business and industrial applications.

The DeepStack GPU version serves requests 5 to 20 times faster than the CPU version, provided you have an NVIDIA GPU.

NOTE: THE GPU VERSION IS ONLY SUPPORTED ON LINUX

Before you install the GPU Version, you need to follow the steps below.

Step 1: Install Docker

If you already have docker installed, you can skip this step.

Step 2: Set Up NVIDIA Drivers

Install the NVIDIA Driver

Step 3: Install NVIDIA Docker

The native Docker engine does not support GPU access from containers. However, nvidia-docker2 modifies your Docker install to support GPU access.

Run the commands below to modify the docker engine

If you run into issues, you can refer to this GUIDE

Step 4: Install DeepStack GPU Version

Step 5: Run DeepStack with GPU Access

Once the above steps are complete, add the arguments --rm --runtime=nvidia when you run DeepStack.
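As a rough illustration of this step, the sketch below assembles the run command in Python. The flags --rm and --runtime=nvidia come from the step above, and host port 80 matches the localhost:80 address used for the admin page. The image name deepquestai/deepstack:gpu and the container port 5000 are assumptions, so check them against the DeepStack documentation for your version.

```python
import shlex


def deepstack_gpu_command(image="deepquestai/deepstack:gpu",
                          host_port=80, container_port=5000):
    """Build the `docker run` argument list for a GPU-enabled container.

    The image name and container port are assumptions made for this sketch.
    """
    return [
        "docker", "run",
        "--rm",              # remove the container once it stops
        "--runtime=nvidia",  # use the NVIDIA runtime set up in Step 3
        "-p", f"{host_port}:{container_port}",  # publish the server port
        image,
    ]


if __name__ == "__main__":
    # Print the command so it can be reviewed before running it by hand.
    print(shlex.join(deepstack_gpu_command()))
```

Building the command as a list rather than a single string keeps each argument separate, which makes it safe to hand to subprocess.run without shell quoting problems.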

Step 6: Activate DeepStack

The first time you run deepstack, you need to activate it following the process below.

Once you initiate the run command above, visit localhost:80/admin in your browser. The interface below will appear.

You can obtain a free activation key from https://register.deepstack.cc

Enter your key and click Activate Now

The interface below will appear.

This step is only required the first time you run deepstack.

DeepStack is the first AI computer programme to beat professional poker players in a game of heads-up no-limit Texas hold’em, a team of researchers claim in a research paper out this week.

The use of games to train and test AI is widespread. Surpassing human-level performance in a game is considered an impressive feat, and a mark of progression for machine intelligence. DeepStack had an average win rate of more than 450 mbb/g (milli big blinds per game) over 44,000 hands of poker, a high number considering that 50 mbb/g is a respectable margin in professional poker.
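To make the mbb/g unit concrete, here is a minimal sketch of the conversion: net winnings are expressed in big blinds, scaled by 1,000 and divided by the number of games. The function name and the chip counts in the example are hypothetical, chosen only so the arithmetic is easy to follow.

```python
def win_rate_mbb_per_game(net_chips_won, big_blind, games_played):
    """Return a win rate in milli big blinds per game (mbb/g)."""
    if big_blind <= 0 or games_played <= 0:
        raise ValueError("big_blind and games_played must be positive")
    big_blinds_won = net_chips_won / big_blind
    return 1000.0 * big_blinds_won / games_played


# Hypothetical session: 100-chip big blind, 3,000 games, 135,000 chips won.
rate = win_rate_mbb_per_game(135_000, 100, 3_000)
print(rate)         # 450.0
print(rate / 50.0)  # 9.0, i.e. nine times a "respectable" 50 mbb/g margin
```

In other words, a 450 mbb/g win rate means winning 0.45 big blinds per game on average.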

Last year, Mark Zuckerberg announced his AI team was building an agent to play the ancient game of Go, but was upstaged when news broke that Google’s AI arm, DeepMind, had already beaten Lee Sedol, a professional player, in a series of matches streamed on live TV with AlphaGo.

Now, researchers are battling it out over poker. The paper [PDF], written by researchers from the University of Alberta in Canada and the Charles University and Czech Technical University in the Czech Republic, is currently under peer review but has been published unofficially on arXiv.

It was released in the same week that academics from Carnegie Mellon University in Pennsylvania announced their AI poker bot, Libratus, will play against top Heads-Up No-Limit Texas Hold’em poker players at Rivers Casino in Pittsburgh, Pennsylvania.

A live stream of the Brains vs Artificial Intelligence tournament shows that Libratus is leading at the time of writing on Friday, after beating its human opponents on the first day of the competition.

Poker is a difficult game for machines to master. Unlike chess, Jeopardy!, Atari video games or Go, no-limit Texas hold’em is considered an imperfect information game. Players do not have identical information about the current state of the game, as they withhold private information from one another.

AI poker intuition

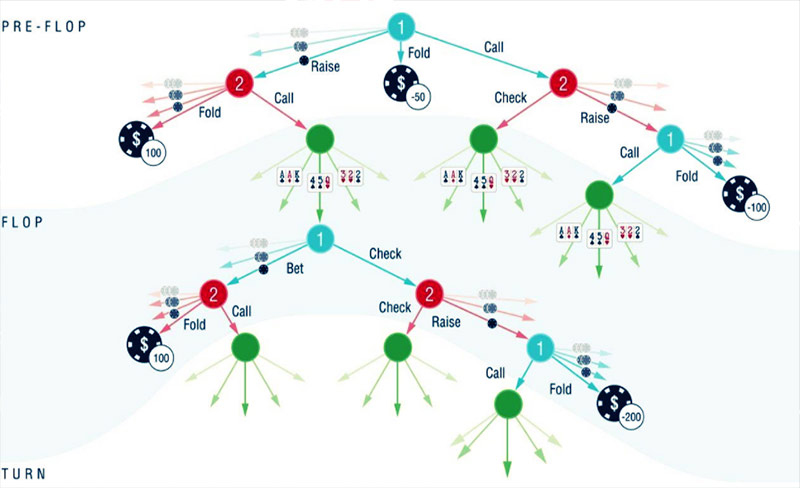

A game between two players betting any number of chips produces 10^160 possible situations, a number too large for a computer to handle. To skirt around the problem, DeepStack “squeezes” it down to 10^14 abstract situations that are learned by playing against itself.
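The gap between those two figures is easier to appreciate with a little arithmetic. The sketch below takes them as 10 to the power 160 and 10 to the power 14, and assumes, purely for illustration, that one byte is stored per situation; the real storage cost per situation is not given in the article.

```python
FULL_GAME_SITUATIONS = 10 ** 160  # situations in the full game
ABSTRACT_SITUATIONS = 10 ** 14    # abstract situations after "squeezing"

# How many full-game situations map onto each abstract one, on average.
reduction_factor = FULL_GAME_SITUATIONS // ABSTRACT_SITUATIONS
orders_of_magnitude = len(str(reduction_factor)) - 1
print(orders_of_magnitude)  # 146

# Assumption for illustration only: one byte per situation.
BYTES_PER_SITUATION = 1
terabytes = ABSTRACT_SITUATIONS * BYTES_PER_SITUATION / 10 ** 12
print(terabytes)  # 100.0
```

Even under that generous one-byte assumption, the abstract game is on the order of 100 terabytes, while the full game is 146 orders of magnitude beyond that.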

Like DeepMind’s AlphaGo, DeepStack picks its next move from a bank of possible moves by calculating which types of scenarios are more likely, something the researchers compare to intuition: “A gut feeling of the value of holding any possible private cards in any possible poker situation.”

The programme’s “intuition” has to be trained using two neural networks. One learns to estimate the counterfactual, or “what-if”, values after the first three public cards are dealt; the other recalculates those values after the fourth public card is dealt.

Reducing the number of situations means the decision tree computed by DeepStack is effectively pruned, which makes it easier to approximate the Nash equilibrium (a solution in game theory in which no player has an incentive to change his or her strategy) continuously, after each round.
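The idea of a Nash equilibrium can be checked directly on a much smaller game. The sketch below uses rock-paper-scissors, a two-player zero-sum game, and measures how much each player could gain by unilaterally switching to a single action. It is a toy illustration of the definition only, not DeepStack's method, and all function names are made up for this example.

```python
# Row player's payoff; rows and columns are ordered rock, paper, scissors.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]
ACTIONS = range(3)


def expected_payoff(row_strategy, col_strategy):
    """Expected payoff to the row player under two mixed strategies."""
    return sum(row_strategy[i] * col_strategy[j] * PAYOFF[i][j]
               for i in ACTIONS for j in ACTIONS)


def deviation_gains(row_strategy, col_strategy):
    """Best gain each player can get by switching to one pure action."""
    current = expected_payoff(row_strategy, col_strategy)
    row_best = max(sum(col_strategy[j] * PAYOFF[i][j] for j in ACTIONS)
                   for i in ACTIONS)
    # Zero-sum game: the column player's payoff is minus the row player's.
    col_best = max(-sum(row_strategy[i] * PAYOFF[i][j] for i in ACTIONS)
                   for j in ACTIONS)
    return row_best - current, col_best + current


def is_nash(row_strategy, col_strategy, tol=1e-9):
    """True if neither player can gain by changing strategy alone."""
    row_gain, col_gain = deviation_gains(row_strategy, col_strategy)
    return row_gain <= tol and col_gain <= tol


uniform = [1 / 3, 1 / 3, 1 / 3]
always_rock = [1.0, 0.0, 0.0]
print(is_nash(uniform, uniform))      # True
print(is_nash(always_rock, uniform))  # False: the opponent should switch to paper
```

When both players mix uniformly, neither can improve by deviating, so the pair is an equilibrium. If one player always throws rock, the other gains a full point per game by always playing paper, so that pair is not.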

Since it doesn’t have an overarching strategy decided before the game, it doesn’t need to keep tabs on all 10^14 abstract situations, and it can solve the decision tree in under five seconds.

“The DeepStack algorithm is composed of three ingredients: a sound local strategy computation for the current public state, depth-limited lookahead using a learned value function over arbitrary poker situations, and a restricted set of lookahead actions,” the paper said.

DeepStack in luck

A closer look at the results shows that DeepStack did not win by a statistically significant amount against every player. Eleven out of the 33 professional poker players completed the requested 3,000 games, and for all but one of the 11 players, DeepStack didn’t win by a remarkable amount; the top human poker player only lost by about 70 mbb/g.

DeepStack “was overall a bit lucky,” the researchers admitted, but after taking slight adjustments into account, its estimated win rate was still a sizeable 486 mbb/g, down from the initial 492 mbb/g.

Although DeepStack’s opponents aren’t the best poker players, the result is still impressive, Miles Brundage – who is not involved in this research and is an AI Policy Research Fellow at the Future of Humanity Institute at the University of Oxford – told The Register.

“It still very decisively beat new players – and is reasonably good – considering it’s not the best possible neural net. It seems very scalable and there are a lot of obvious ways to improve it, like adding more GPUs,” Brundage said.

With seven layers, each equipped with 500 nodes, it’s not the biggest neural network. Part of the success of DeepMind’s AlphaGo in mastering Go and beating the world’s best players is due to its larger and more powerful neural network, Brundage explained.
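For a sense of scale, the sketch below counts the trainable parameters between seven layers of 500 nodes each. It assumes the layers are fully connected with one bias per node, which the article does not state, and it leaves out the input and output layers because their sizes are not given here.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases between consecutive fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Seven layers of 500 nodes give six layer-to-layer connections.
hidden_layers = [500] * 7
print(dense_parameter_count(hidden_layers))  # 1503000
```

Under those assumptions the layers described hold roughly 1.5 million parameters, which is small by the standards of modern deep networks.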

In comparison, AlphaGo was trained with four neural networks each with tens of layers and many nodes.

DeepStack and Libratus seem to be making great strides in poker, but both AI poker bots can only play against one other player. The next challenge to conquer is to build an AI that can play against multiple players all at once, and maybe even bluff.